The Singularity Paradox: AI and the Edge of the Unknown - Part 2

Patterns in Chaos – The Emergence Phenomenon

(Part 2 of a three-part exploration of AI’s past, present, and the possibilities ahead)

Introduction

The most fascinating advancements in AI aren't just about speed, power, or accuracy—they’re about surprise. Unexpected abilities, sudden leaps in performance, and the emergence of skills that no one explicitly programmed into these systems are redefining what we know about intelligence.

This phenomenon is known as emergence—when complex, unexpected behaviors arise from simple interactions. It’s one of the most mysterious and important concepts in AI today.

In this part of the series, we’ll dive into how AI systems display emergent behavior, why it matters, and what it tells us about the future of intelligence—human or otherwise.

Let’s explore the patterns hidden in the chaos.

Exploring a Theme: Emergence

Emergence: the phenomenon where new and often unexpected patterns, properties, or behaviors arise in a system as a result of the interactions between its components.

-ChatGPT

Emergence highlights how simple rules or interactions at a micro-level can lead to complex and often unpredictable behaviors at a macro-level.

These emergent properties are not present in the individual components themselves but arise when the components come together and interact in a specific manner. An emergent property is not merely the sum of the properties of system components. Instead, it's something new and different that arises from their interactions.

To me, one of the most intriguing examples of emergence comes from Stephen Wolfram’s book “A New Kind of Science.”

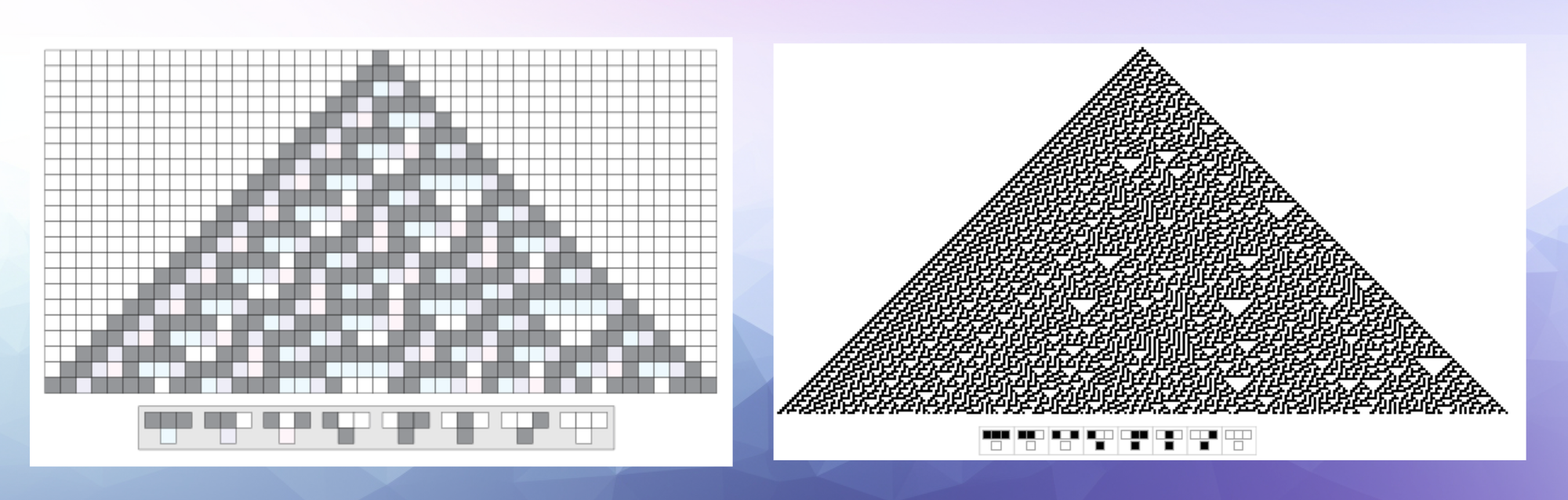

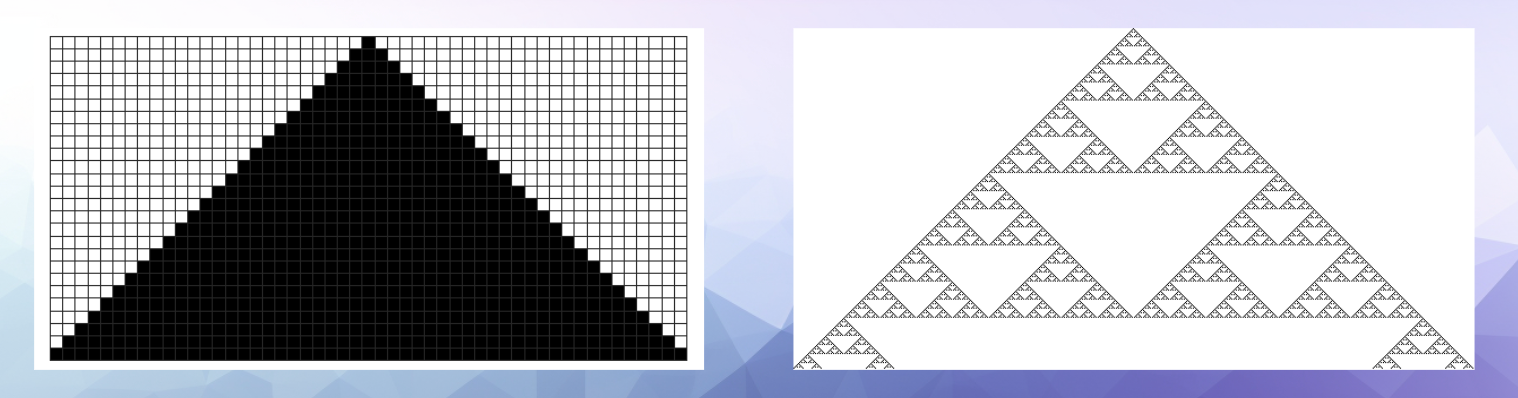

In this book, he studies the behavior of cellular automata, which are grids of cells that evolve over time based on a set of rules and the states of neighboring cells. These systems resemble the neural networks that underlie AI in one important respect: they show how very simple components and rules can lead to very complex patterns. Finally, it’s important to note that these systems are deterministic, meaning that if you use the same starting conditions and rules, you will always get the same results.

The images above show two “one-dimensional” cellular automata, where each row of cells represents one iteration of the system. Each one starts with a single filled-in cell (or pixel) at the top and a set of 8 rules for how the cells should evolve on each iteration. The example on the left shows around 20 iterations, and the example on the right shows around 100.
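To make the idea of “8 rules” concrete, here is a minimal Python sketch of a one-dimensional cellular automaton. It assumes the standard “elementary” setup, where each cell looks at itself and its two neighbors (giving 8 possible neighborhoods), and it uses Wolfram’s convention of packing those 8 rules into a single number from 0 to 255. The function names `step`, `run`, and `render` are made up for this illustration, and Rule 30 is only an example choice; it is not necessarily the rule behind either image above.

```python
def step(cells, rule):
    """Apply one iteration of an elementary cellular automaton.

    cells: list of 0/1 values; cells beyond the edges are treated as 0.
    rule:  integer 0-255 whose 8 bits give the next state for each of
           the 8 possible (left, center, right) neighborhoods.
    """
    padded = [0] + cells + [0]
    new_cells = []
    for i in range(1, len(padded) - 1):
        # Read the neighborhood as a 3-bit number between 0 and 7.
        index = (padded[i - 1] << 2) | (padded[i] << 1) | padded[i + 1]
        # Bit number `index` of the rule is the cell's next state.
        new_cells.append((rule >> index) & 1)
    return new_cells


def run(rule, steps):
    """Start from a single filled cell and return every row, top to bottom."""
    width = 2 * steps + 1      # wide enough that the pattern never reaches an edge
    row = [0] * width
    row[steps] = 1             # single filled cell in the middle of the top row
    rows = [row]
    for _ in range(steps):
        row = step(row, rule)
        rows.append(row)
    return rows


def render(rows):
    """Draw filled cells as '#' and empty cells as '.'."""
    return "\n".join("".join("#" if c else "." for c in row) for row in rows)


if __name__ == "__main__":
    print(render(run(rule=30, steps=20)))
```

Because the system is deterministic, calling `run(30, 20)` twice gives exactly the same rows. Changing only the rule number (for example to 90) produces a completely different picture from the same starting cell.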

It’s hard to talk about cellular automata without mentioning the famous example devised by the mathematician John Conway, called the “Game of Life”. The Game of Life is a simple two-dimensional system where each cell can only have one of two states: “dead” (black) or “alive” (white). Here’s a short video that shows some of the complex patterns that can arise from this system.

life within life

So you’ve got the Game of Life embedded inside the Game of Life. It’s turtles all the way down, or what you might call a process fractal.
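For readers who want to try it themselves, here is a minimal sketch of one Game of Life generation in Python. The rules are the standard ones: a cell with exactly 3 live neighbors is alive in the next generation, a live cell with exactly 2 live neighbors stays alive, and every other cell is dead. Storing the live cells as a set of coordinates and the name `life_step` are simply choices made for this illustration.

```python
from collections import Counter


def life_step(alive):
    """Advance the Game of Life by one generation.

    alive: set of (x, y) coordinates of live cells on an unbounded grid.
    """
    # For every cell next to at least one live cell, count its live neighbors.
    neighbor_counts = Counter(
        (x + dx, y + dy)
        for x, y in alive
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Alive next generation: exactly 3 live neighbors,
    # or currently alive with exactly 2.
    return {
        cell
        for cell, n in neighbor_counts.items()
        if n == 3 or (n == 2 and cell in alive)
    }


# A "glider": five cells that crawl diagonally across the grid.
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = life_step(state)

# After 4 generations the glider has its original shape again,
# moved one cell along each axis.
print(state == {(x + 1, y + 1) for x, y in glider})
```

Nothing in `life_step` mentions gliders or movement. The moving shape is a small example of emergence: it only appears when the rules are applied to many cells at once.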

So, back to Wolfram. In his early research, he was literally defining many different rules and initial conditions and letting them run for thousands of iterations just to see what would happen. A lot of these systems would either die out, become static, or converge to simple repeating patterns:

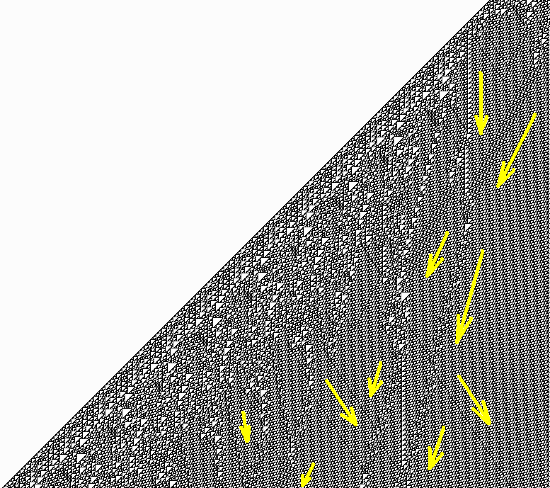

However, some systems displayed complex, chaotic, and seemingly unpredictable behavior:

This led him to formulate the principle of computational irreducibility, which states that the behavior of some computational systems cannot be predicted by any shortcut; it can only be found by running the computation.

For such systems, there is no “formula” that gives you the answer, even though the system is deterministic. To discover how the system will behave in the future, it has to be “played out” step by step.
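A small experiment can illustrate the difference between a system that has a shortcut and one that doesn’t. In the Python sketch below (function names are illustrative), Rule 90 serves as an example of a reducible system: started from a single cell, its pattern is Pascal’s triangle modulo 2, so any row can be computed directly without running the automaton. For Rule 30, no comparable shortcut is known, so the only option in the sketch is to run every step. Both rule numbers are assumptions chosen for illustration, not taken from the images above.

```python
def step(cells, rule):
    """One iteration of an elementary cellular automaton (edges treated as 0)."""
    padded = [0] + cells + [0]
    return [
        (rule >> ((padded[i - 1] << 2) | (padded[i] << 1) | padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]


def simulate_row(rule, t):
    """Row t of the automaton, found the slow way: by running all t steps."""
    row = [0] * (2 * t + 1)
    row[t] = 1                      # single filled cell in the middle
    for _ in range(t):
        row = step(row, rule)
    return row


def rule90_cell(t, x):
    """Rule 90 shortcut: state of the cell at offset x from the center in row t.

    The cell is filled exactly when the binomial coefficient C(t, k) is odd,
    with k = (x + t) / 2, and C(t, k) is odd exactly when k and t - k
    share no 1-bits.
    """
    if abs(x) > t or (x + t) % 2:
        return 0
    k = (x + t) // 2
    return 1 if (k & (t - k)) == 0 else 0


def rule90_row(t):
    """Row t of Rule 90 computed directly, with no simulation."""
    return [rule90_cell(t, x) for x in range(-t, t + 1)]


if __name__ == "__main__":
    # The shortcut and the simulation agree for Rule 90 ...
    print(all(simulate_row(90, t) == rule90_row(t) for t in range(64)))
    # ... but for Rule 30 the sketch has no formula to call; it has to be played out.
    print("".join("#" if c else "." for c in simulate_row(30, 30)))
```

The cost difference is the point: `rule90_cell` answers a question about row one million with a couple of bit operations, while `simulate_row(30, 1_000_000)` would have to compute every row before it.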

In AI terms, computational irreducibility suggests that you can’t always predict the capabilities of an AI system from its training data, or the output of an AI from its input, without actually running it.

Emergence in AI

As a direct example of this, it turns out that AIs can not only translate between languages they were trained on, but can also translate languages they’ve never seen before.

In the example below, researchers tested an AI’s ability to understand Persian, a language it had never been trained on.

For each test, they simply increased the AI’s scale (for example, the number of neurons in the neural network) while keeping the training data the same.

After a certain scale, for no discernible reason, the AI was suddenly able to understand Persian.

In another example an AI was able to do the same thing with Bengali. Note the mention of the “black box problem”:

This next example is a study of an AI’s “theory of mind” level.

Theory of mind is a psychological term that describes our ability to understand that others have thoughts, feelings, and beliefs that are different from our own. It’s the basis of empathy, perspective taking, moral reasoning, etc.

This study was done around March of 2023. The researchers took models developed at different times and gauged each model’s theory of mind as compared to a human. In 2020, it was around the level of a 4-year-old child.

Two years later, it was at the level of a 7-year-old.

A few months later, it was almost at a 9-year-old level.

Finally, as of March 2023, it was at the level of an adult:

What’s possibly the most incredible aspect of these results is that, prior to this study, the creators of these AIs had no idea this capability even existed within their models.

Emergence challenges our understanding of intelligence. It’s not just about training data or the size of a neural network; it's about the complex, unpredictable behaviors that arise when systems interact with their environment.

As AI continues to evolve, we’ll see more examples of emergent capabilities—some that inspire awe, and others that may give us pause. But one thing is certain: emergence is pushing us into uncharted territory where traditional explanations fall short.

Coming up next...

In Part 3: The Singularity – A Journey Toward Truth, we’ll explore what happens when emergent intelligence leads to a tipping point—a shift that could change our understanding of reality itself.

See you in Part 3!