The Singularity Paradox: AI and the Edge of the Unknown - Part 1

The Dawn of AI – A Journey Through Time

(Part 1 of a three-part exploration of AI’s past, present, and the possibilities ahead)

Introduction

We live in extraordinary times.

ChatGPT was officially released on November 30, 2022, barely two years before the writing of this post. Since then the world has witnessed what may be the fastest wave of technological advancement in history, in the form of Artificial Intelligence (AI). Change this fast is often unsettling, and many are fearful of where this path will take us: will AI take our jobs? Will it take over and enslave us, or worse? Some are optimistic, prophesying the incredible utopia that awaits us. Finally (and to me, most surprisingly), many are simply unaware, or possibly willfully oblivious, of the magnitude of the shift that is happening.

I would like to change that.

For all of my life, there have been two aspects of the human experience that filled me with an unquenchable thirst for understanding.

The first might be captured by the question: "What is Truth?" This is the question behind all of science, particularly physics, as well as all the religious and spiritual teachings of the world.

The second is simply: technology. I have always been in awe of what humans have been able to accomplish with knowledge and the desire to create. To me technology feels like pure magic, a magic that I yearn to understand and wield myself.

I have come to believe that my journey has not been a coincidence, and that these two sources of awe and wonder are beginning to converge. What this means exactly is still unclear to me, but I would like to take you on a journey of how I got here. It's a journey of discovery and possibilities. It's a look into where we came from, where we are now, and where we are going. It's a deep dive into the unknown...

Ready? Buckle up.

Artificial Intelligence

AI, or Artificial Intelligence, is the ability of machines to mimic or replicate human-like thinking and decision-making processes. It enables computers to learn from experience, adapt to new information, and perform tasks that typically require human intelligence.

-ChatGPT

To set the stage for this deep dive, I'd like to take a (relatively) brief look at the history of AI. Please bear with me, as this overview will set the context for the rest of our journey.

- In 1943 the first mathematical model of a neural network was introduced. Initially this seemed very exciting and intuitive. The idea was simple: design and build a system that could mimic the architecture and behavior of the human brain.

- In 1950 Alan Turing presented the Turing Test which posed the question: can a machine be indistinguishable from a person?

- The late 1960s and 1970s brought the first "AI Winter", a period of reduced funding and interest, due in part to the technical limitations of neural networks given the state of hardware at the time.

- In the 1980s technical breakthroughs drove renewed interest in neural networks.

- In 1997 IBM's Deep Blue chess computer defeated world chess champion Garry Kasparov. This was a huge milestone, as chess had long been considered by many (at least in the Western world) a pinnacle of human intellectual capability.

- In the 2000s AI technologies like speech recognition, image recognition, and recommendation systems became integrated into consumer products.

- In 2011 IBM's Watson won the quiz show "Jeopardy!" against top human champions. Watson’s victory showed that AI could navigate nuanced language such as puns and wordplay.

- 2016 - DeepMind’s AlphaGo defeats world champion Lee Sedol in the board game Go.

Go is far too complex to master by brute-force calculation, so master Go players typically rely on intuition built over a lifetime of experience. Another big difference between Deep Blue and AlphaGo is that Deep Blue depended largely on built-in, hard-coded strategies provided by experts, while AlphaGo learned its strategies. It first learned by studying expert games, but it mastered the game by playing against itself millions of times.

AlphaGo also invented strategies that had never been seen before and displayed a masterful level of intuition for the game. In my mind this tells a story of a student studying the expert strategies of a master and eventually surpassing the master through its own creativity. As it turns out this is somewhat of a recurring theme in AI that we’ll see again later.

- 2017 - The “Transformer” architecture is introduced, which later led to the GPT (Generative Pre-trained Transformer) models.

- 2019 - OpenAI's GPT-2, a large-scale language model, showcases the power of generative models in producing human-like text.

- 2022 - ChatGPT is released and becomes, at the time, the fastest-growing consumer app in history.

- 2023 - GPT-4 scores around the top 10% of test takers on a simulated bar exam (compared to around the bottom 10% for its predecessor, GPT-3.5).

- 2024 - OpenAI's "o3" model scores 87.5% on ARC-AGI, a benchmark designed to measure progress toward Artificial General Intelligence (AGI) by testing how well an AI handles novel reasoning tasks that humans find easy. By comparison, GPT-4o scored 5%.

AI Basics

One way to look at AI is to split it up into 3 theoretical types.

The first type is Narrow AI, which is AI that's specialized in a single task such as image recognition, recommendation systems, or driving autonomous vehicles. Currently this is considered to be the only one of the 3 types that exists.

The second type is called Artificial General Intelligence, or AGI. This is an intelligence that’s comparable to humans. If achieved, this would be considered a major milestone for AI. However, the general nature of this level of AI makes it difficult to define.

As an example the Turing Test was meant to define when AI became indistinguishable from a human. As AI evolved, there wasn’t a single moment when everyone agreed it had passed the Turing Test. Instead, as systems grew more sophisticated, people gradually began to accept that they had met or even exceeded the test’s expectations.

In the same way, there might not be a clear moment when AGI is universally recognized. Instead, as AI capabilities approach human-level understanding, there could be a gradual consensus that we have indeed reached AGI, just as it happened with the Turing Test. However, we might be much closer to this than we ever expected.

Finally there’s Artificial Super Intelligence, which is an intelligence beyond humans. Humanity has never encountered an intelligence greater than our own, and as we'll see later, there's very little that can be said with confidence about what it would be like.

Neural Networks

So, back to the first and arguably most fundamental development in AI: the neural network.

I’ll use the example of learning what apples look like to help provide a sense of how they work.

Imagine you're trying to teach a computer to recognize apples. Instead of giving it a strict set of rules (like "apples are red" or "apples are round"), you show it lots of labeled pictures—some of apples and some of other things.

A neural network is like a virtual brain inside the computer. It consists of layers of tiny processing units called "neurons." These neurons learn from the pictures you show, just like how our brain learns from experiences.

The more pictures you show, the better the neural network gets at recognizing apples. It adjusts and fine-tunes itself, just like how we learn from our mistakes.

Neural networks are actually pretty simple:

- Input Layer: you feed the pictures here (pixel data). Like our eyes seeing the images.

- Hidden Layers: These are the “thinking” parts. They process and analyze the images to find patterns.

- Output Layer: This is where the neural network makes its guess. It might say “I’m 90% sure this is an apple.”
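To make the three layers a bit more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the 4-pixel "image", the layer sizes, and the random starting weights are made up, and the network is untrained, so its guess is meaningless. Training would be the process of nudging those weights until the guesses match the labels.

```python
import math
import random

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of its inputs, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def make_layer(n_inputs, n_neurons, rng):
    """Random starting weights (hypothetical values; training would adjust them)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_neurons)]
    biases = [rng.uniform(-1, 1) for _ in range(n_neurons)]
    return weights, biases

rng = random.Random(0)

# Input layer: a made-up 4-pixel "image" (brightness values between 0 and 1).
pixels = [0.9, 0.1, 0.2, 0.8]

# Hidden layer with 3 neurons, output layer with 1 neuron ("is this an apple?").
hidden_w, hidden_b = make_layer(4, 3, rng)
output_w, output_b = make_layer(3, 1, rng)

hidden = layer(pixels, hidden_w, hidden_b)
apple_score = layer(hidden, output_w, output_b)[0]

print(f"Untrained guess that this is an apple: {apple_score:.0%}")
```

A real image model works the same way in spirit, just with millions of pixels, many more layers, and weights learned from labeled pictures rather than picked at random.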

If you've heard the term “Deep Learning”, this is simply a neural network with many MANY hidden layers. For example, GPT-3 has 175 billion parameters (the adjustable connection strengths between neurons), and GPT-4, whose size OpenAI has not disclosed, is widely believed to be considerably larger.

The takeaway point here is that neural networks are essentially a black box. Even AI experts will tell you that we don't fully understand what is really going on inside larger neural networks. We just know how to make them.

Traditional vs Generative AI

When talking about modern-day AI it's useful to make a distinction between “traditional” AI and “generative” AI.

Traditional AI is typically more passive and focuses on analysis and categorization, for example, classifying cute animal images.

In contrast, generative AI actively creates content such as images, text, music, and videos.

This is an example that I created running a model on my laptop:

As you can see the model generates images by starting with pure noise, or pure chaos, and then iteratively adjusting the pixels to more closely match the input prompt, very much like a painter starting with a blank canvas using a balance of intent and intuition to bring forth their creation...

Transformers: The Big Shift

Alright, let's talk about Transformers * insert obligatory transformer pun *.

In 2017 the “Transformer” architecture was introduced. This presented a new approach to deep learning models and quickly became the new “engine” for AI.

One benefit of the Transformer architecture was that it was a shift toward simplicity and generalization. This was actually continuing a trend that can be seen when comparing, for example, Deep Blue vs AlphaGo. Deep Blue was extremely complex and included hand-crafted strategies for playing chess, while AlphaGo was much more generic and “learned” its strategies rather than having them pre-programmed. This generalization allowed AlphaGo’s successor, AlphaZero, to master Chess and Shogi (Japanese Chess) on top of Go.

In the case of Transformers, this idea of making things simpler continued. Transformers came from a 2017 paper titled "Attention Is All You Need." Instead of relying on the more complicated recurrent designs that came before, Transformers were built almost entirely around an existing technique called the "attention" mechanism. This lets them focus on the most relevant parts of the information they're given, similar to how we might highlight the most important parts of a book.
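For the curious, the "highlighting" idea can be sketched in a few lines of Python. This is a minimal illustration of scaled dot-product attention for a single query, the basic form described in that paper; the vectors are hand-made toy values, and real models learn them and run many of these in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # How well does the query match each key? Higher score = more relevant.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a blend of the values, weighted by relevance.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy data (assumed for illustration): three "words", each with a key and a value.
keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print("attention weights:", [round(w, 3) for w in weights])
print("blended output:", [round(x, 3) for x in output])
```

The first and third keys point in nearly the same direction as the query, so they receive the most weight, and the second is mostly ignored. That is the highlighter at work.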

Another benefit was their shift towards methods better fit for parallelization compared to earlier methods which were more sequential.

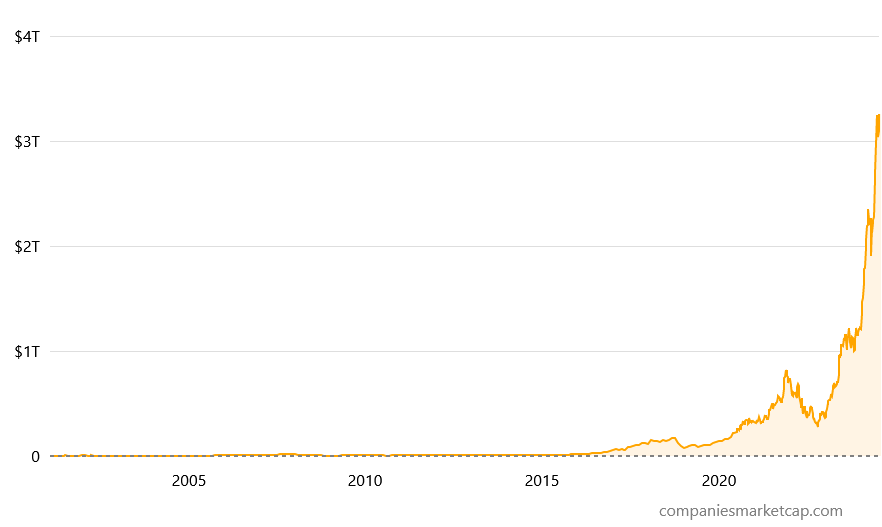

This made Transformers highly optimizable for modern hardware such as graphics processors, which was super convenient for graphics card companies like Nvidia, whose stock price has since gone through the roof and which is now considered one of the most valuable companies in the world. In June of 2024 its market capitalization hit $3.34 trillion, overtaking Microsoft’s $3.32 trillion valuation.

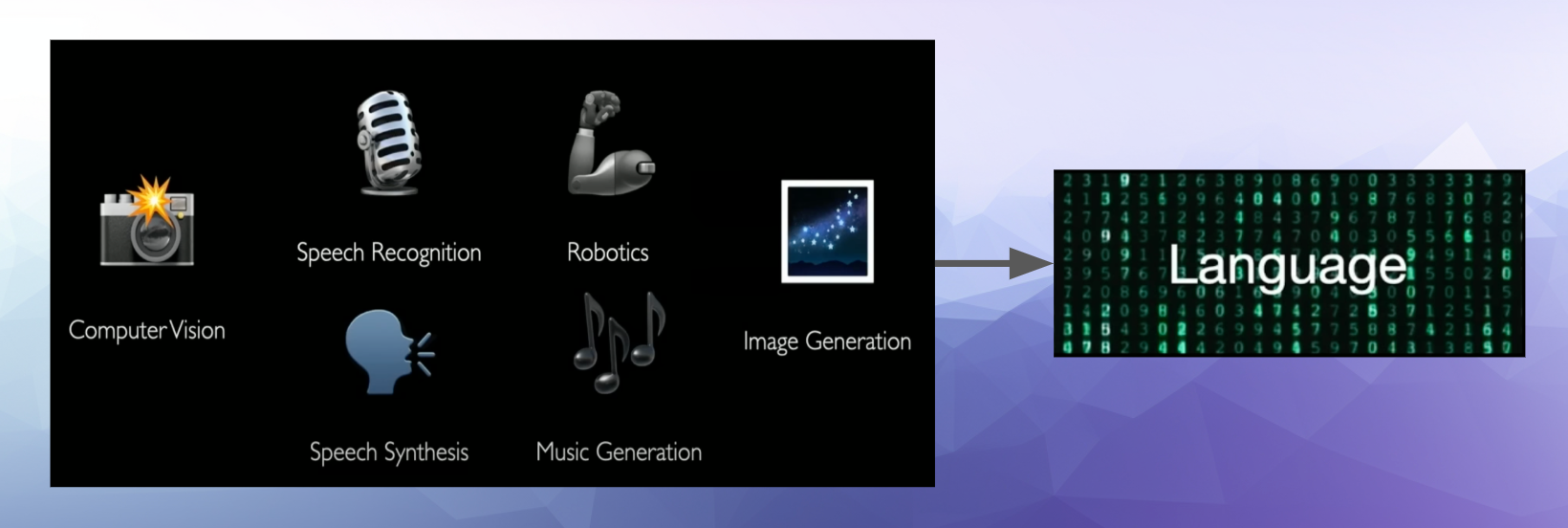

As with AlphaGo, the shift towards simplicity came hand in hand with a shift towards generalization. This made Transformers extremely versatile and capable of tackling almost any field.

While Transformers do come with downsides, these are simple as well.

Basically, they require huge amounts of both compute power and data to train. It turns out both of these challenges are pretty straightforward to tackle if you pump enough money into them, and big tech companies (and more recently entire national governments) have been happy to do so. In fact, OpenAI recently made a request for the US Government to support building multiple 5-gigawatt data centers. Just one of these would draw roughly as much power as a major city like Miami, and these would all be just for AI.

Possibly the biggest implication of this step towards simplicity and generalization is that we discovered that what used to be many different and distinct fields of research in AI have all reduced to one, which can most accurately be described as: language.

How AI (Large Language Models/LLMs) Work

Let's dig a little deeper to understand how these AI (or in this case Large Language Models or LLMs) actually work.

As it turns out, if you were to describe it in terms of text, these new AI systems are essentially just really really REALLY good autocomplete. This example shows basically how an LLM is trained. Now remember, these LLMs are still simply neural networks at their core.

You give it a partial sentence as input, and the next word in the sentence as output. Just as you would expect with autocomplete the goal is for the model to guess, or learn, what the most likely next word in a sentence is.
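Here is a small sketch of that idea in Python. It is a toy under loud assumptions: the three-sentence corpus is made up, and the "model" just counts which word tends to follow which, whereas a real LLM uses a neural network over far more text. The shape of the training data (partial sentence in, next word out) is the part worth noticing.

```python
from collections import Counter, defaultdict

def training_pairs(sentence):
    """Split a sentence into (partial sentence, next word) examples."""
    words = sentence.split()
    return [(" ".join(words[:i]), words[i]) for i in range(1, len(words))]

def train_bigram(sentences):
    """Toy 'model': count which word follows each word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Made-up corpus, purely for illustration.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]

pairs = training_pairs(corpus[0])
for context, target in pairs:
    print(f"input: {context!r:22} -> output: {target!r}")

model = train_bigram(corpus)
print("after 'sat' the model guesses:", predict_next(model, "sat"))
```

Swap the word counts for a large neural network and the three sentences for a large slice of the internet, and you have the rough outline of how an LLM learns.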

It's easy to downplay the significance of what LLMs are doing here. While comparing it to "autocomplete" gives you an idea of what's going on, it doesn't do justice to the profundity of what is required to accomplish this, which is a deep level of understanding. Ilya Sutskever, one of the fathers of modern day AI, a co-founder of OpenAI, and (until recently) the Chief Scientist at OpenAI, beautifully exemplified this:

“Say you read a detective novel. It’s like a complicated plot, a storyline with different characters, lots of events — mysteries like clues. It’s unclear. Then, let’s say that on the last page of the book, the detective has gathered all the clues, gathered all the people, and is saying, ‘Okay, I’m going to reveal the identity of whoever committed the crime, and that person’s name is… — Predict that word.’”

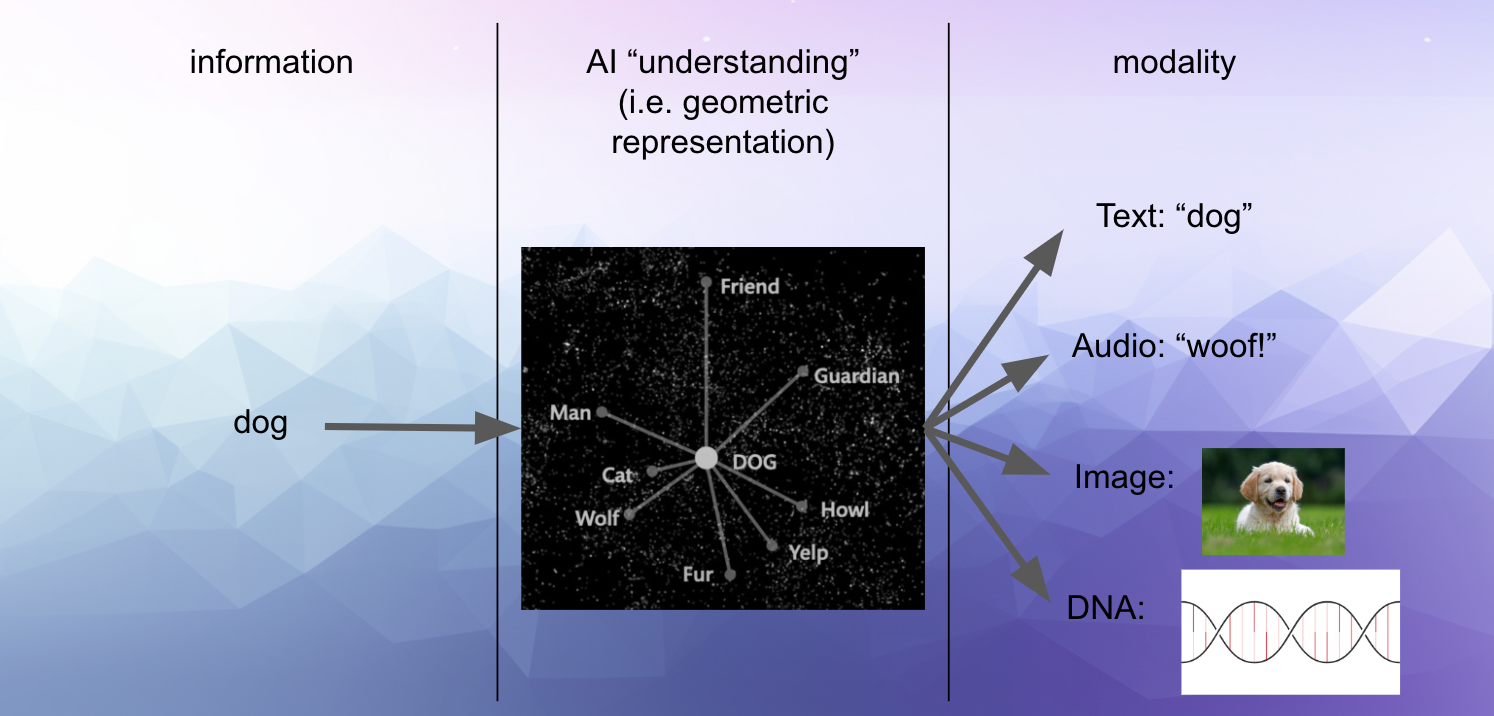

Compared to older models that relied on built-in patterns like grammar rules, these models are actually learning what’s called “semantic pattern recognition”, which means they are learning the meaning behind the words as well as the relationships between words.

They do this by creating geometric representations called “embeddings”.

This geometric representation of the meaning behind the words is agnostic to any single language. The model is shifting from a focus on the data, such as the word “dog” to the actual information that is carried with the word.
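To give a feel for what "geometric" means here, the sketch below measures how close a few words are using cosine similarity, a common way to compare embeddings. The three-number vectors are hand-picked toy values, not output from any real model; real embeddings have hundreds or thousands of dimensions and are learned during training.

```python
import math

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way; near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, chosen by hand for illustration only.
embeddings = {
    "dog": [0.9, 0.8, 0.1],
    "puppy": [0.85, 0.9, 0.15],
    "car": [0.1, 0.2, 0.95],
}

dog_puppy = cosine_similarity(embeddings["dog"], embeddings["puppy"])
dog_car = cosine_similarity(embeddings["dog"], embeddings["car"])

print(f"dog vs puppy: {dog_puppy:.2f}")
print(f"dog vs car:   {dog_car:.2f}")
```

Words with related meanings end up pointing in similar directions, so "dog" sits much closer to "puppy" than to "car". Meaning becomes something you can measure with a ruler.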

It turns out that if you map different languages and then overlay the shapes of these languages on top of each other, the word “dog” ends up at nearly the same location, even for languages that developed largely independently of each other, such as English and Japanese.

Now, if you’re like me, this is about the point where the hair on your neck starts tingling and you realize that something very profound is going on here inside these black boxes.

But What Is Language?

This raises the question: what IS a language? What falls into this concept that we’re calling “language”?

As it turns out... everything.

This makes sense if you think about it in terms of meaning and information. A language is just the intellect putting labels on something that intuition just “knows”. It's how we transform the subjective into the objective.

This means these AIs are learning to speak the, quote, “language” of images, sound, code, DNA, music…the list goes on. For example, an AI can take a concept like “a website” and express that concept in the language of code.

In AI terms these different languages are called “modalities”. Here again we see how the information of the concept of a dog is learned and stored geometrically by the AI which can then translate that concept into these different modalities:

This may sound wild, but they can even learn to speak the language of your brain activity.

Just by looking at the fMRI data of someone looking at a giraffe, the AI can reproduce what the person is looking at by "translating" from the fMRI modality into the image modality.

Coming up next...

This concludes Part 1 of this series on the evolution of AI. We've explored its fascinating origins, key milestones, and the core mechanics that power today's AI systems. But we've only scratched the surface.

In Part 2: Patterns in Chaos – The Emergence of New Intelligence, we dive into one of the most profound aspects of AI: emergence. What happens when AI starts to exhibit abilities beyond its original programming? Why do these abilities seem to appear out of nowhere, and what does that mean for the future of intelligence—both human and machine?

Stay curious, and I’ll see you in Part 2!